Optical Flow

Part 1 – Optical Flow Calculation:

In this project we will determine the motion, or more precisely the apparent displacement of points and pixels, between two images. This displacement is known as optical flow, and it has become an essential and ubiquitous component of object tracking and computer vision. To solve this problem, we will use a few well-established tools, methods, and algorithms:

- OpenCV

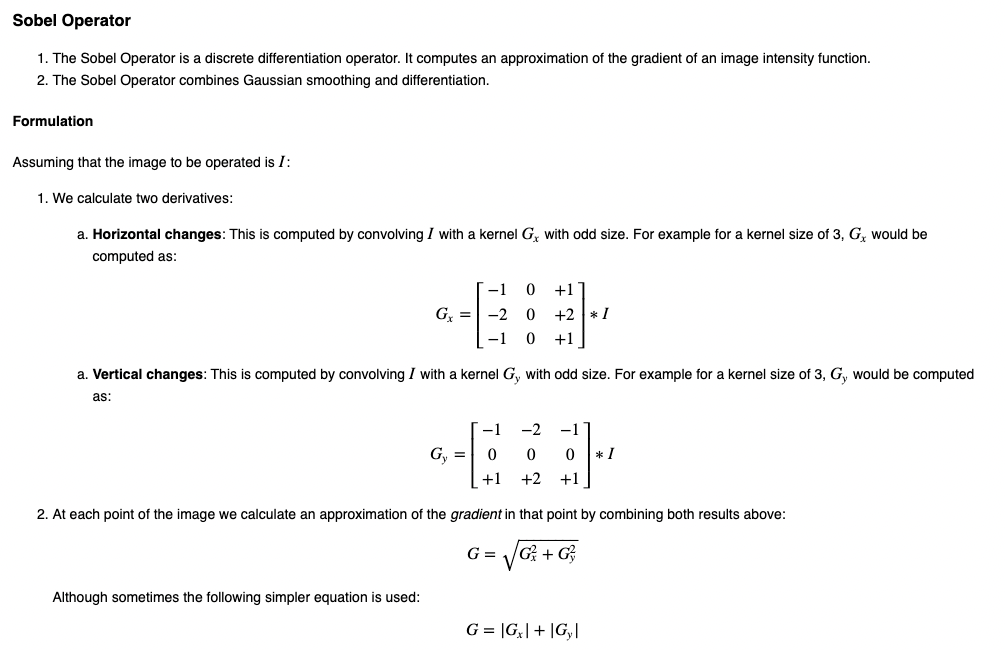

- A Sobel Operator

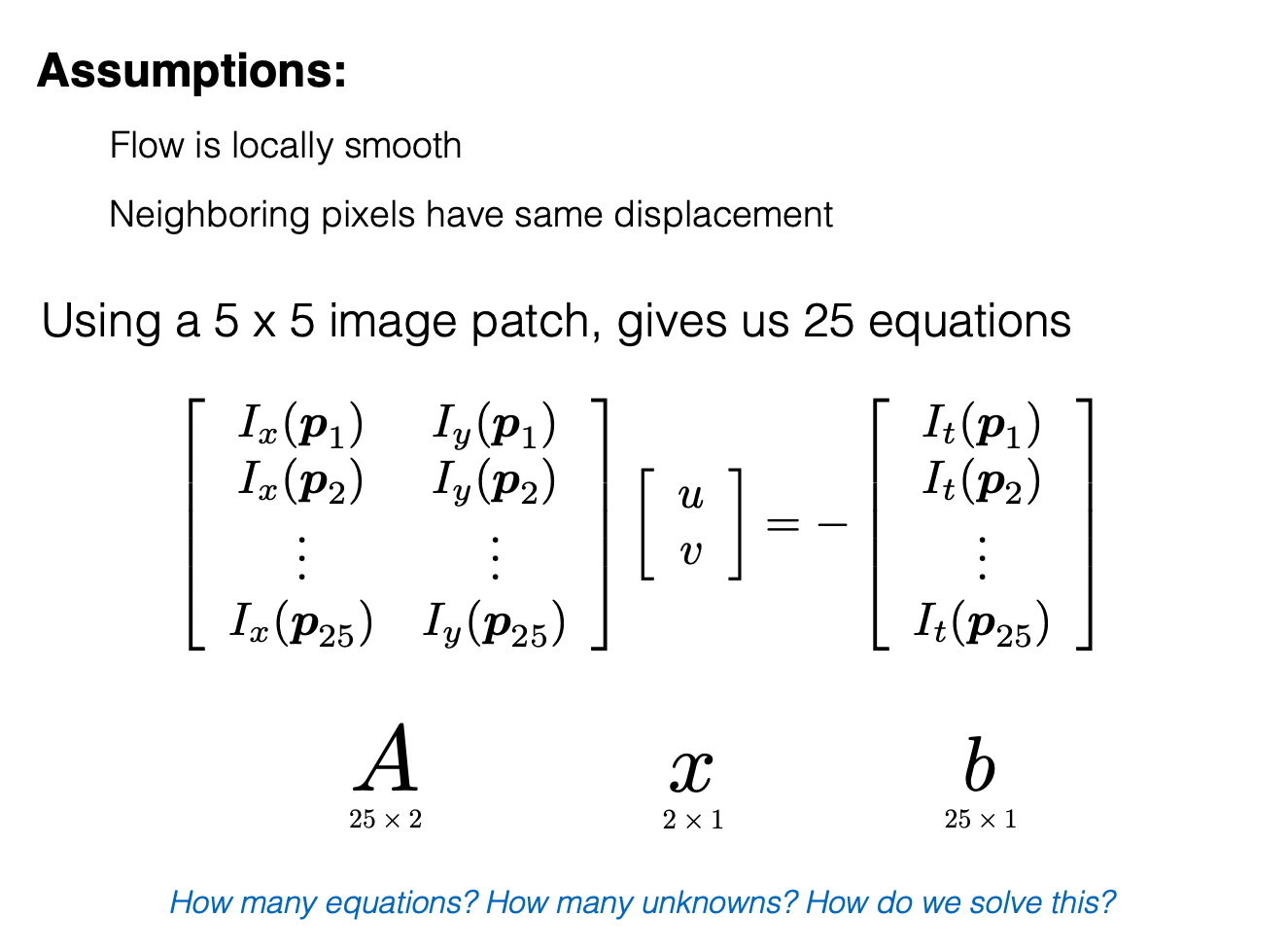

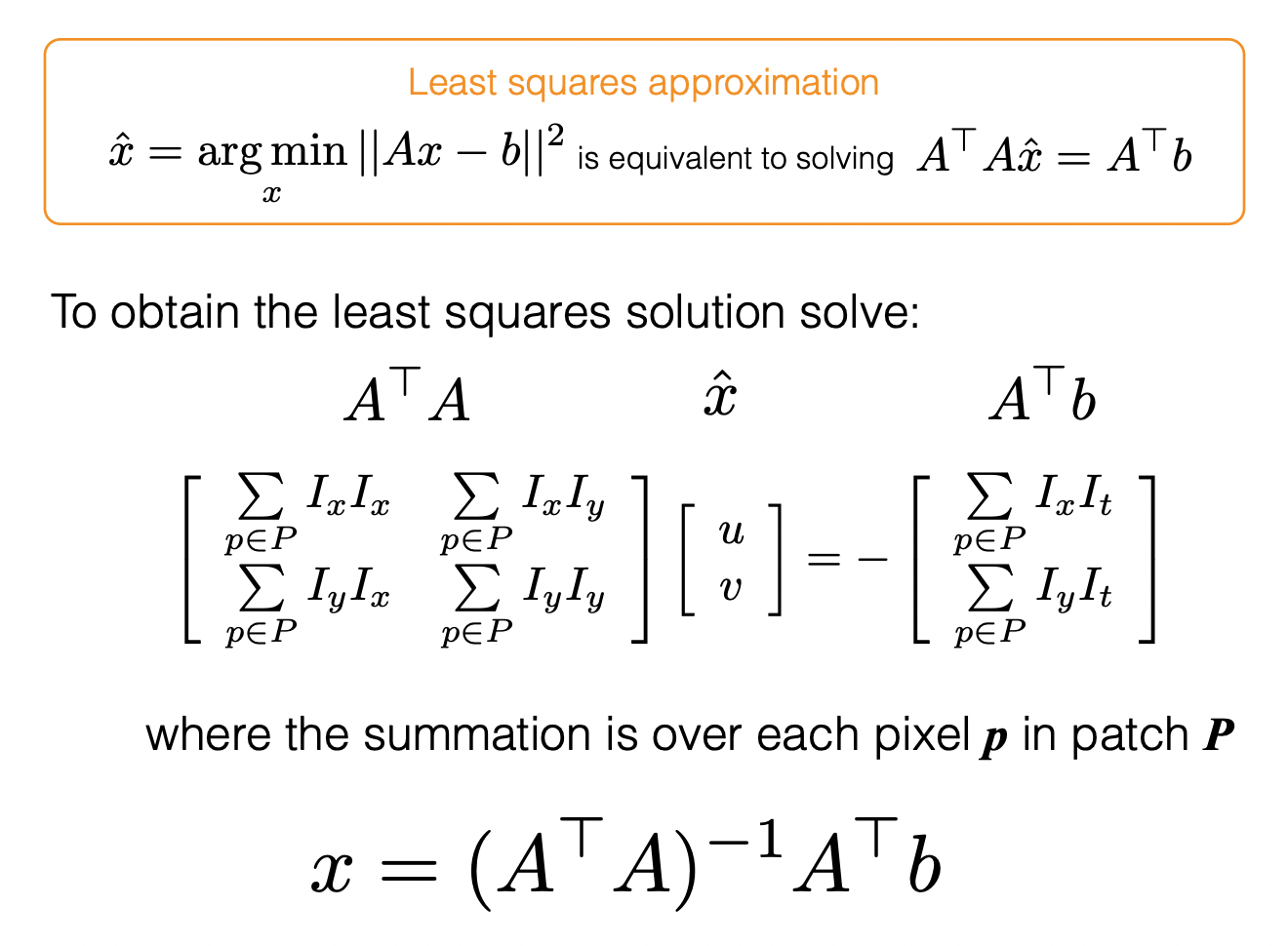

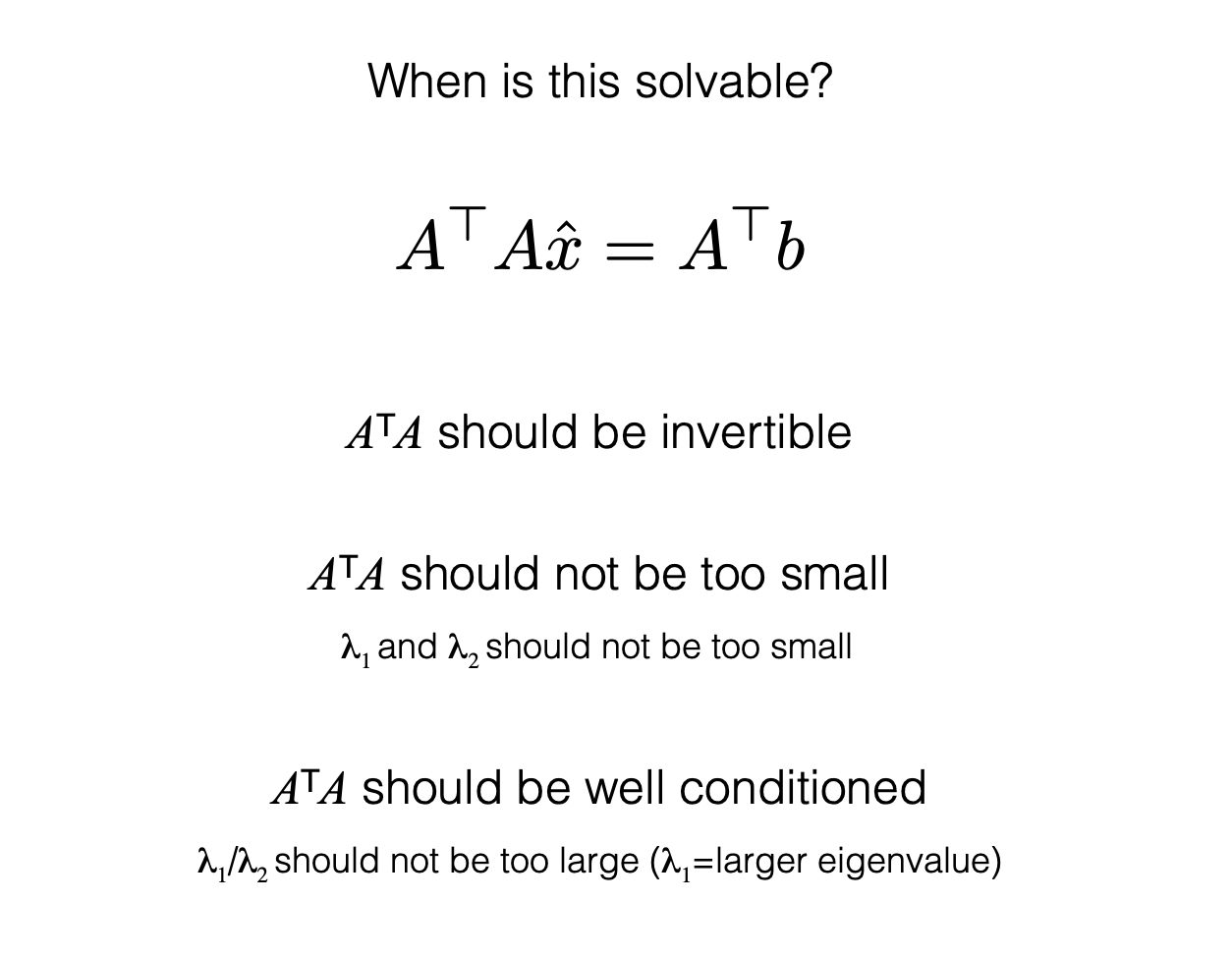

- The Lucas-Kanade Algorithm

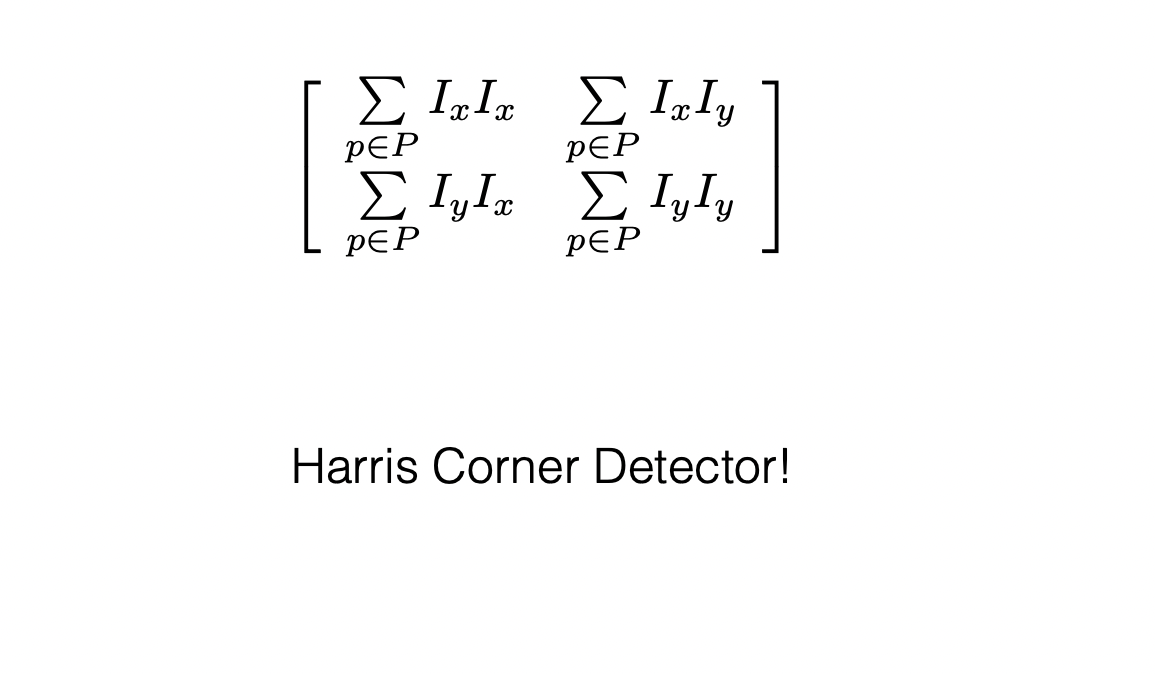

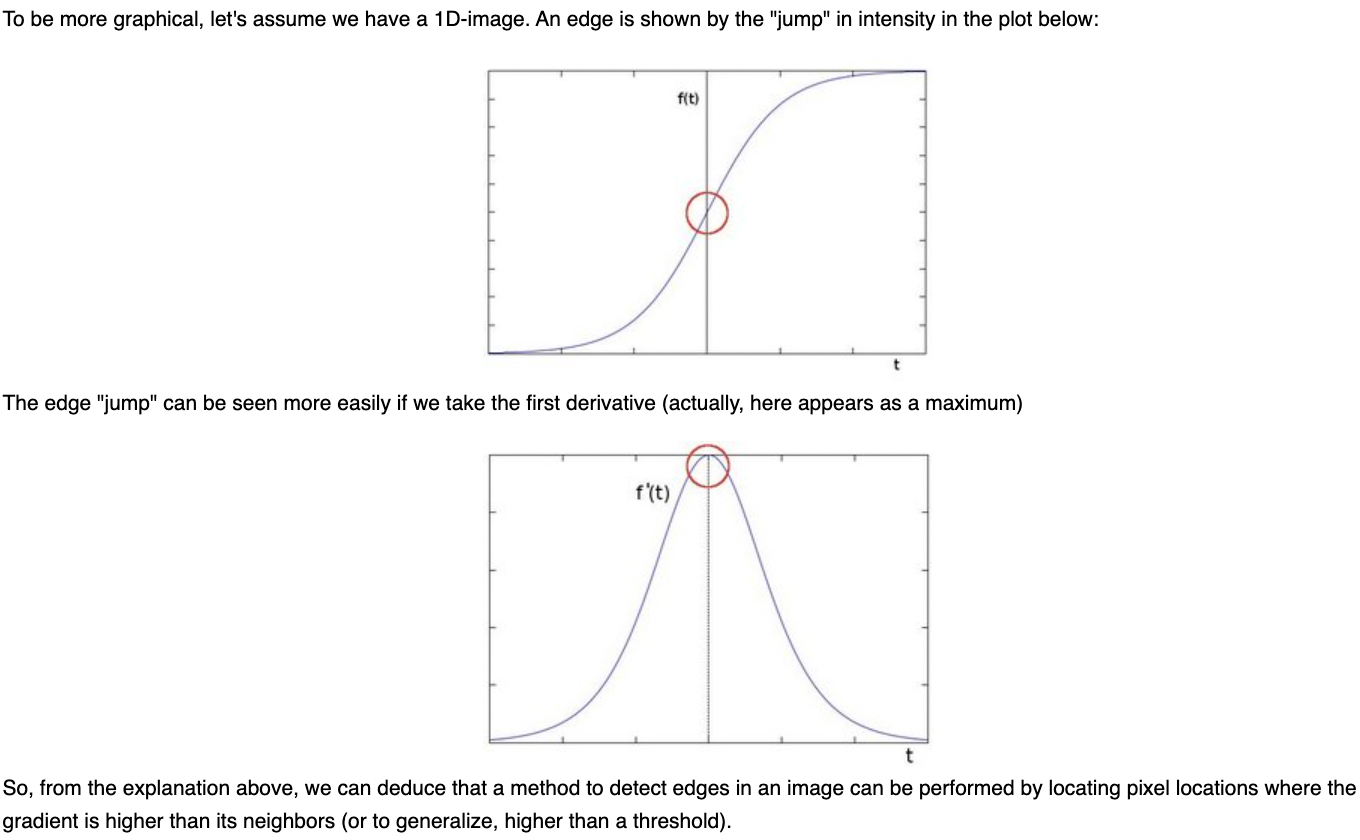

The first step in determining optical flow is edge detection. Where in the past we have used Harris corners, today we are using a Sobel operator. The operator quickly and efficiently approximates the derivative of brightness in the x and y directions of the image; abrupt changes in brightness produce large derivatives, and those mark our edges. These same x and y derivatives are reused later by the Lucas-Kanade algorithm.
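A minimal NumPy sketch of the two 3×3 Sobel kernels and the gradient they produce, assuming a 2-D grayscale array. The helper names `filter3x3` and `sobel_gradients` are hypothetical. In OpenCV the x derivative would come from `cv2.Sobel(img, cv2.CV_64F, 1, 0, ksize=3)`, but OpenCV's default border handling (reflect-101) differs from the edge replication used here, so values on the image border may not match.

```python
import numpy as np

# Standard 3x3 Sobel kernels: KX responds to left-to-right brightness change,
# KY (its transpose) to top-to-bottom change.
KX = np.array([[-1, 0, 1],
               [-2, 0, 2],
               [-1, 0, 1]], dtype=float)
KY = KX.T


def filter3x3(img, kernel):
    """Correlate a 2-D image with a 3x3 kernel, replicating edge pixels at the border."""
    padded = np.pad(np.asarray(img, dtype=float), 1, mode="edge")
    h, w = padded.shape[0] - 2, padded.shape[1] - 2
    out = np.zeros((h, w), dtype=float)
    # Accumulate each kernel weight times the correspondingly shifted image.
    for dy in range(3):
        for dx in range(3):
            out += kernel[dy, dx] * padded[dy:dy + h, dx:dx + w]
    return out


def sobel_gradients(img):
    """Return (Ix, Iy, gradient magnitude) for a grayscale image."""
    ix = filter3x3(img, KX)
    iy = filter3x3(img, KY)
    return ix, iy, np.hypot(ix, iy)


# Usage: a vertical step edge between columns 2 and 3.
img = np.zeros((5, 5))
img[:, 3:] = 1.0
ix, iy, mag = sobel_gradients(img)
```

On this vertical step edge the x derivative is nonzero only in the two columns flanking the step, and the y derivative is zero everywhere, so the magnitude picks out exactly the edge.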

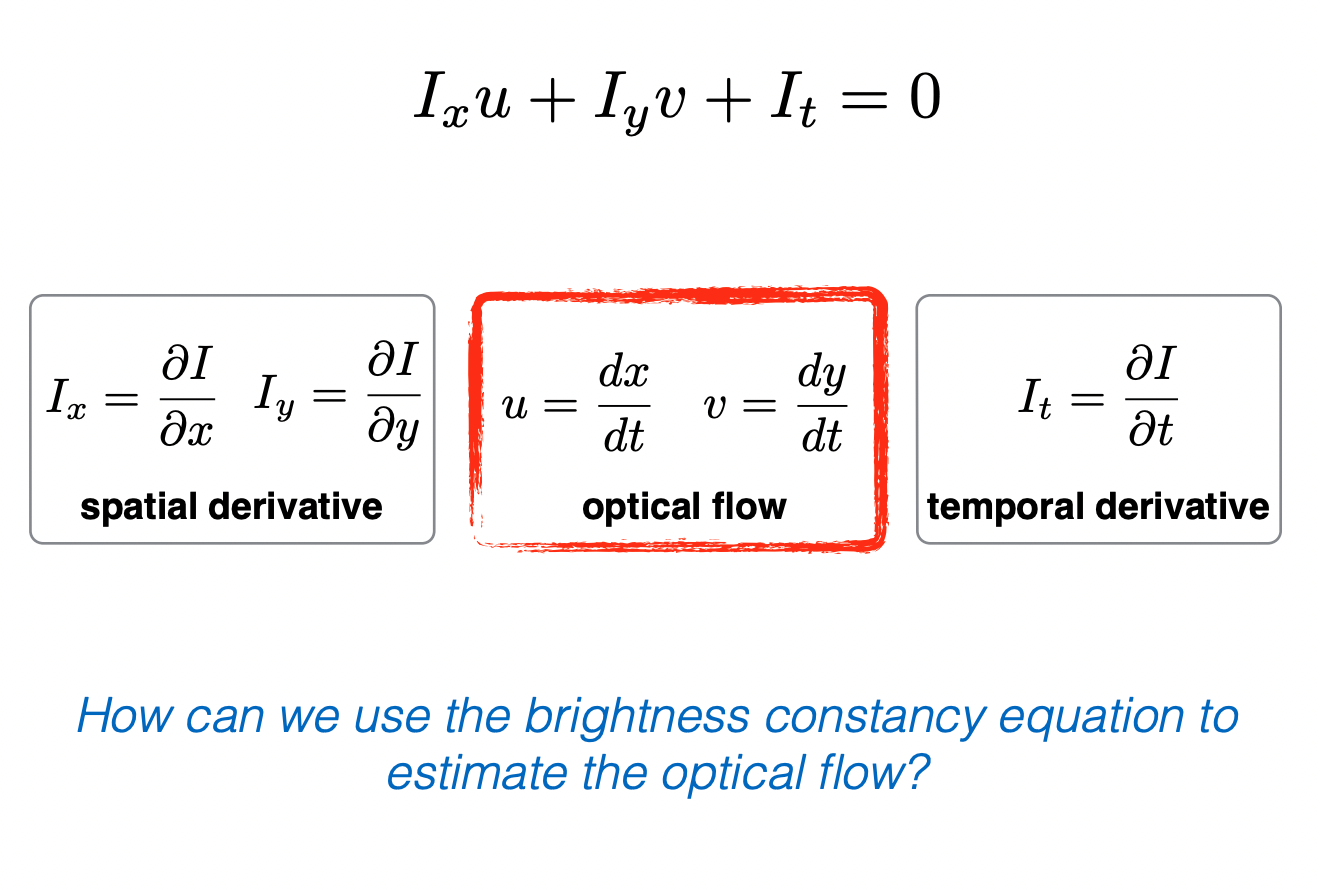

Brightness and its derivatives will be recurring themes, not only in optical flow and computer vision but in how we perceive movement itself. Optically, for humans and machines alike, motion can only be detected through a change in perspective, a change in physical distance, or a visible change in how light interacts with our spatial environment. This brings us to the Lucas-Kanade algorithm and its basic assumptions about our two images.
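Written out, the brightness constancy assumption and its first-order Taylor expansion show exactly which brightness derivatives enter the problem. The notation here is the standard textbook one, assumed rather than defined earlier in this notebook: $I(x, y, t)$ is image intensity, $(u, v)$ is a pixel's displacement over one time step, and $I_x$, $I_y$, $I_t$ are the partial derivatives of $I$.

$$
\begin{aligned}
I(x, y, t) &= I(x + u,\, y + v,\, t + 1) \\
I(x + u,\, y + v,\, t + 1) &\approx I(x, y, t) + I_x u + I_y v + I_t \\
\Rightarrow \quad I_x u + I_y v + I_t &= 0
\end{aligned}
$$

The expansion drops second-order terms, so it is only accurate when $(u, v)$ is small.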

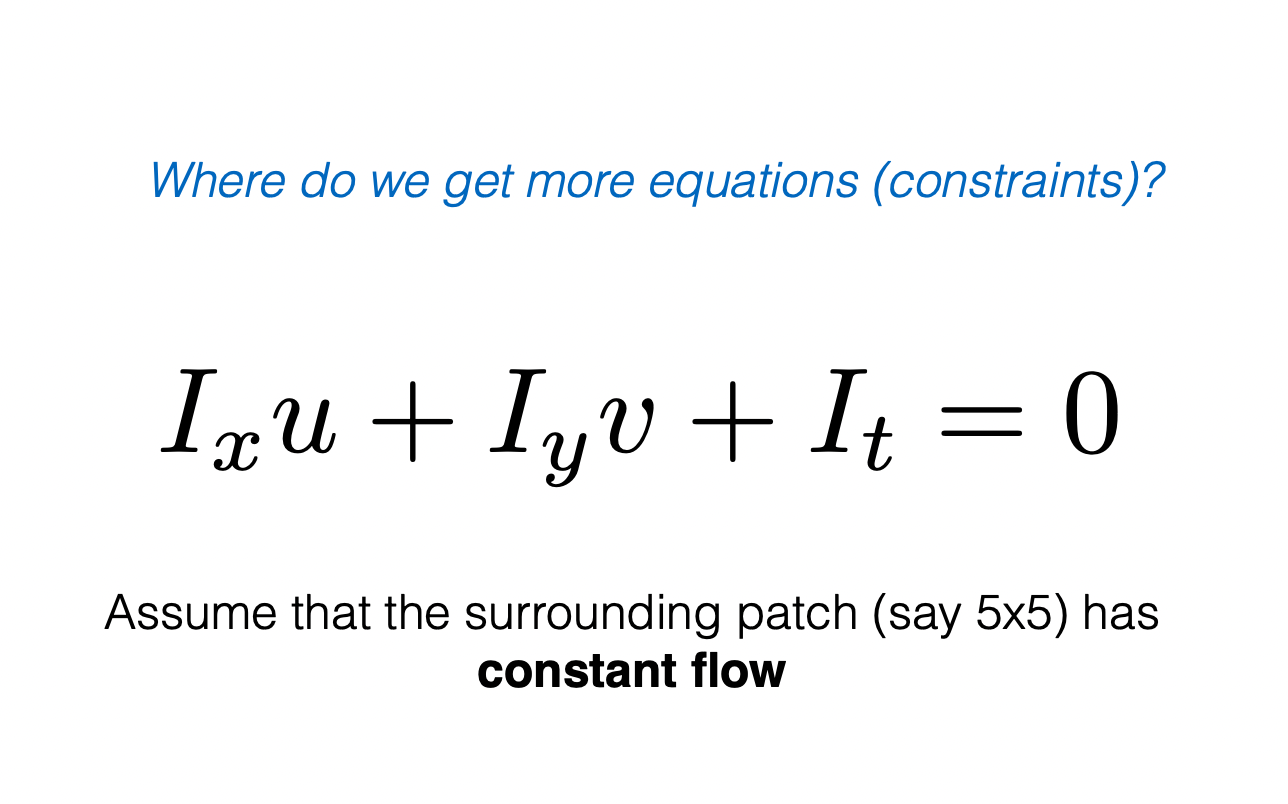

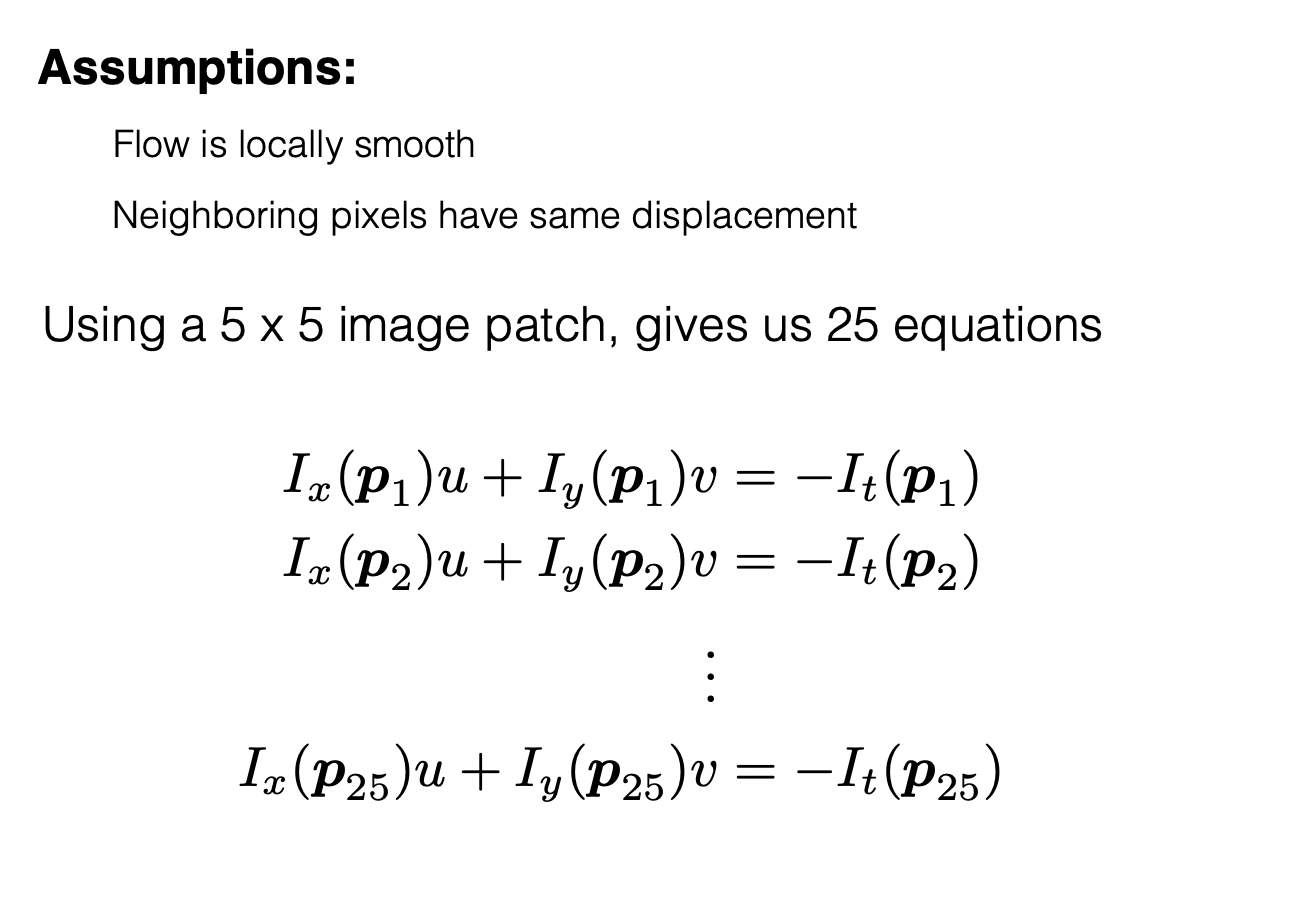

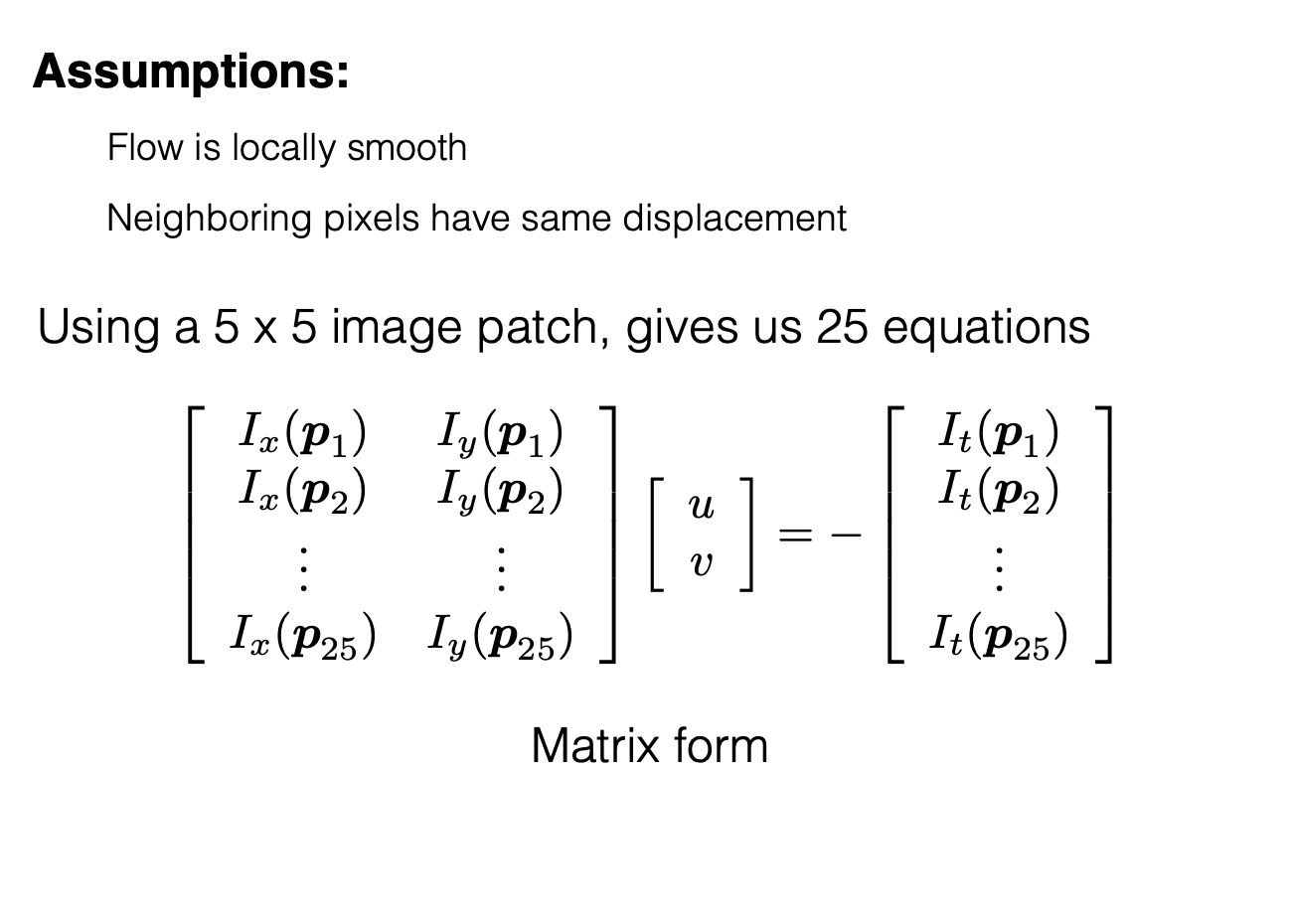

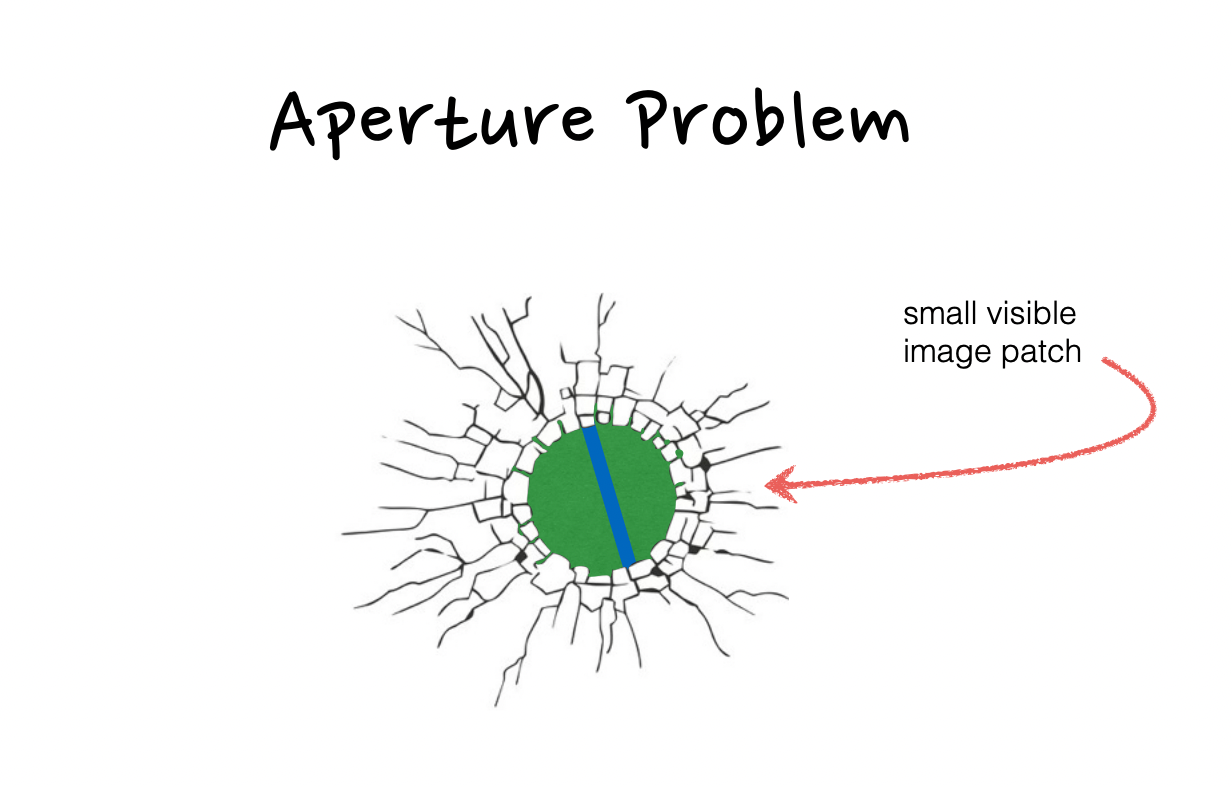

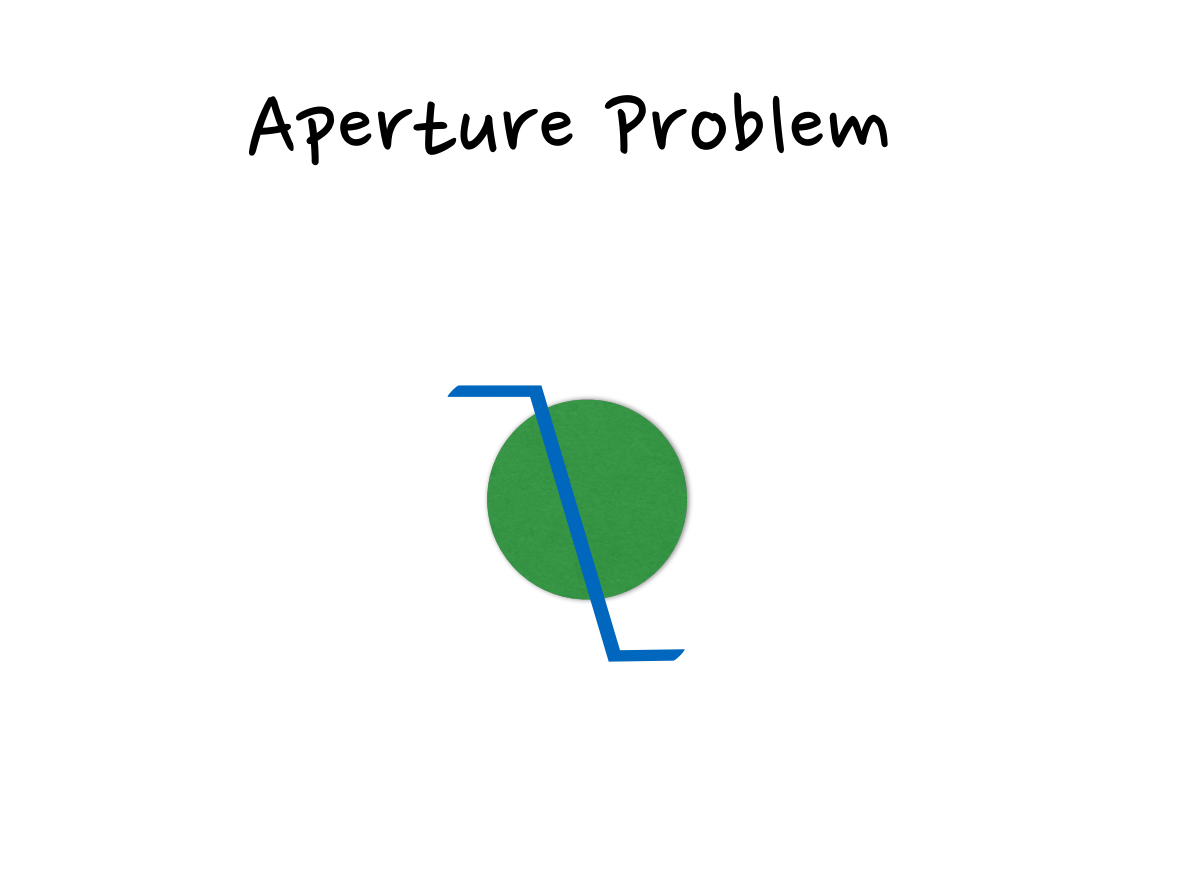

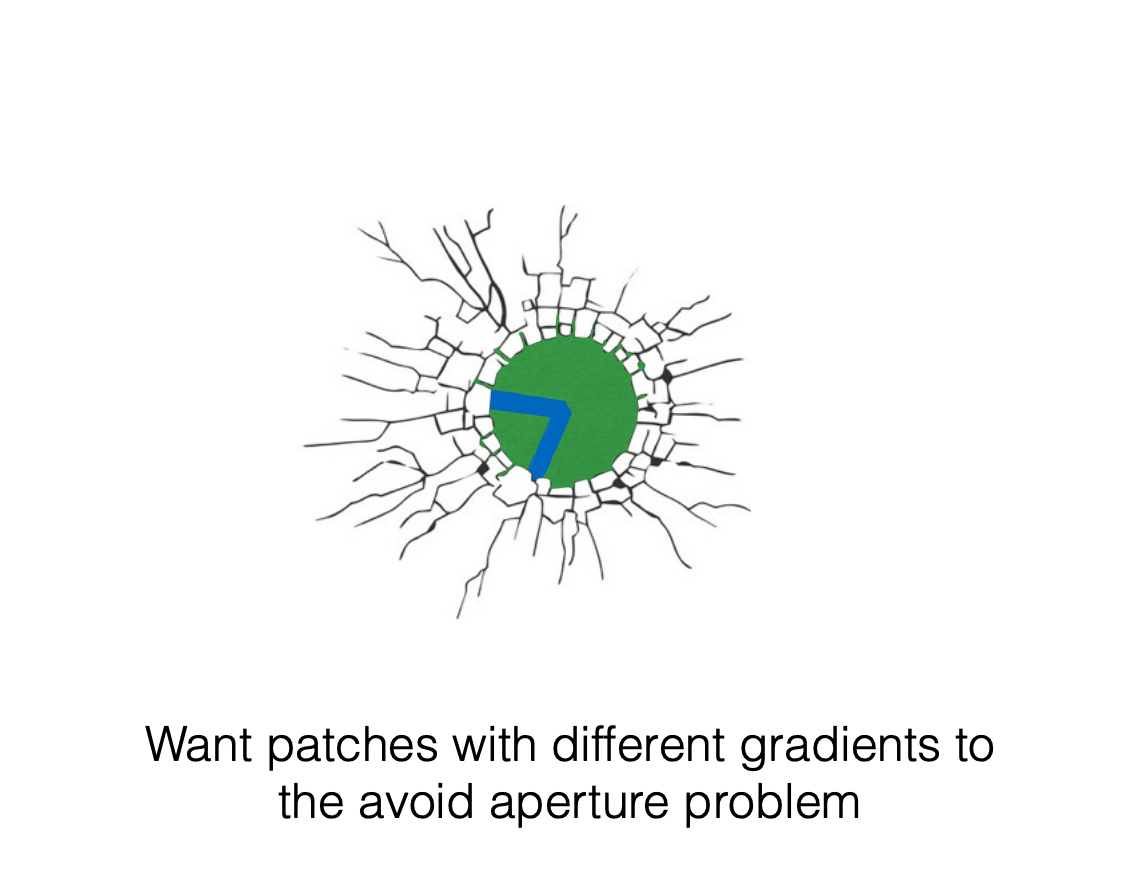

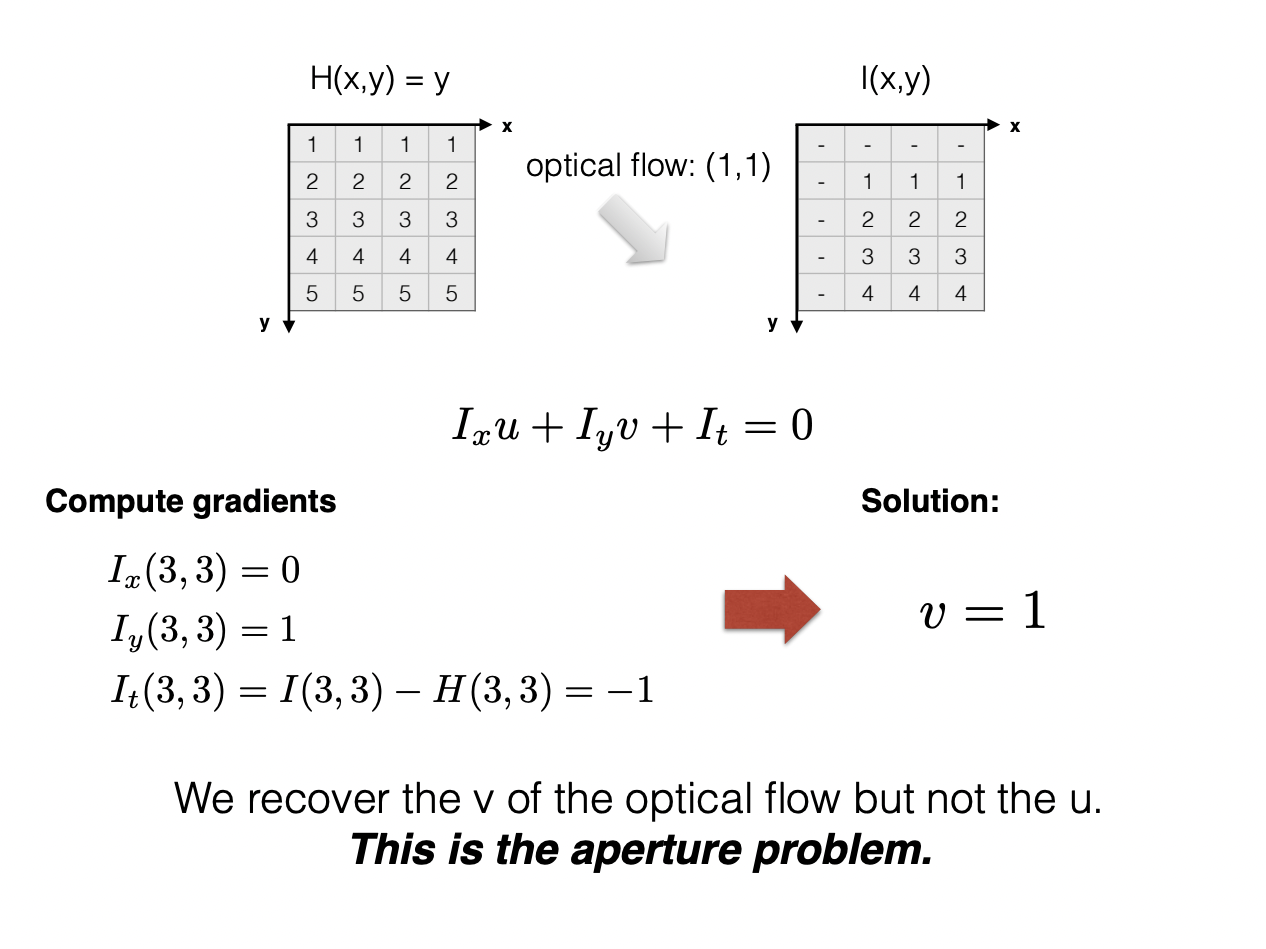

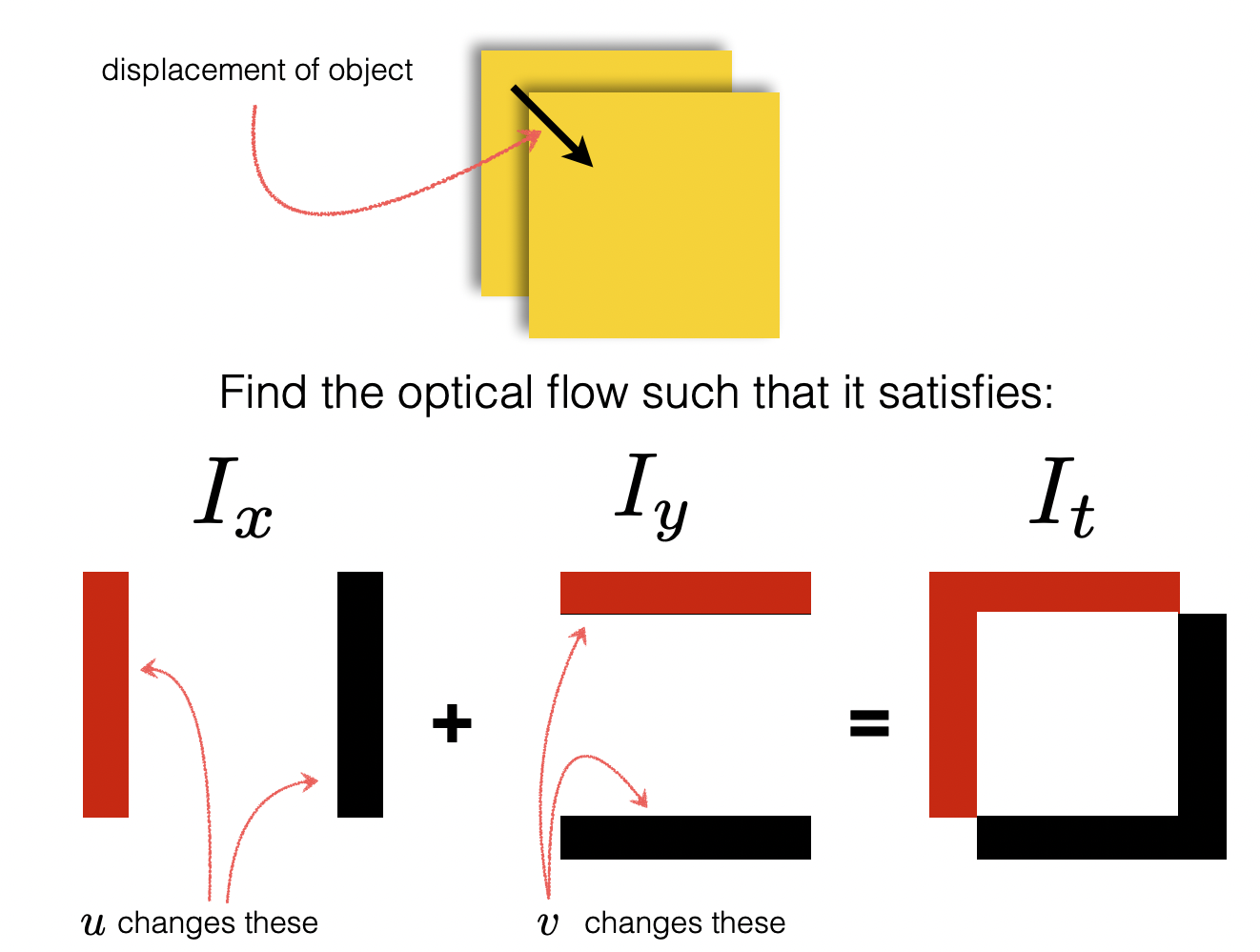

The algorithm makes three assumptions about our two frames. It assumes spatial coherence: pixels move like their neighbors. It assumes small, steady motion across our time step: pixels move in a single direction, and they don't move very far. Most importantly, it assumes brightness constancy: a pixel looks the same in both frames, which is to say it keeps approximately the same intensity value. Brightness constancy yields a single equation per pixel linking the spatial and temporal brightness derivatives to the unknown motion (u, v). Because one equation cannot determine two unknowns, the spatial-coherence assumption lets us stack that equation over a window of neighboring pixels and solve for (u, v) by least squares.
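A minimal NumPy sketch of the single-window Lucas-Kanade solve described above, assuming grayscale frames and a pixel far enough from the border for the window to fit. The function name `lucas_kanade_at`, the window half-width `half`, and the eigenvalue cutoff `min_eig` are hypothetical choices for illustration. For brevity the spatial derivatives come from `np.gradient` central differences rather than a Sobel filter; OpenCV's production routine for this is the pyramidal `cv2.calcOpticalFlowPyrLK`.

```python
import numpy as np


def lucas_kanade_at(frame0, frame1, row, col, half=2, min_eig=1e-2):
    """Estimate the flow (u, v) at pixel (row, col) with Lucas-Kanade.

    u is displacement along columns (x), v along rows (y). Assumes the
    (2*half+1)-square window lies inside the image. Returns None when the
    window's 2x2 system is too poorly conditioned to solve.
    """
    f0 = np.asarray(frame0, dtype=float)
    f1 = np.asarray(frame1, dtype=float)

    # Spatial derivatives of the first frame; np.gradient returns the
    # derivative along axis 0 (rows, y) first, then axis 1 (columns, x).
    iy, ix = np.gradient(f0)
    # Temporal derivative: brightness change between the two frames.
    it = f1 - f0

    # Spatial coherence: every pixel in the window shares one (u, v),
    # giving one equation Ix*u + Iy*v = -It per window pixel.
    win = (slice(row - half, row + half + 1), slice(col - half, col + half + 1))
    A = np.column_stack([ix[win].ravel(), iy[win].ravel()])
    b = -it[win].ravel()

    # Least squares via the normal equations (A^T A) [u, v]^T = A^T b.
    ata = A.T @ A
    if np.linalg.eigvalsh(ata).min() < min_eig:
        return None  # flat or edge-only window: flow is not uniquely determined
    u, v = np.linalg.solve(ata, A.T @ b)
    return float(u), float(v)


# Usage: a smooth bowl-shaped image shifted by u = 0.3, v = -0.2.
n = 21
y, x = np.mgrid[0:n, 0:n] - n // 2
frame0 = x**2 + y**2
frame1 = (x - 0.3) ** 2 + (y + 0.2) ** 2
flow = lucas_kanade_at(frame0, frame1, n // 2, n // 2)
```

The eigenvalue check matters because a uniform window (no gradient) or a straight edge (gradient in only one direction) makes the 2x2 system singular, so the sketch reports no estimate instead of dividing by nearly zero.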